What is artificial intelligence and what isn't?

Most people who talk about AI haven't spent much time thinking about what it means. What exactly is intelligence as an attribute of a software system?

The phrase “artificial intelligence” is always good for clicks. It has an aura of magic and mystery. AI beats humans at games, creates art, fools us into thinking we are chatting with another consciousness. However, it has no definite meaning. I have seen it used to connote “I don’t understand how that piece of software works, and possibly the people who made it don’t either.” I believe this only creates confusion and promotes the belief that some systems are more human-like than others. The question is, why and when should we talk about a machine having intelligence?

Let’s start with a simple game like tic-tac-toe. We know that the first player should not lose (with perfect play on both sides, the game is always a draw), and it’s easy to see how to play a perfect game. The minimax algorithm is the typical way to solve it. The space of possible states in a tic-tac-toe game is tiny, and computers have been able to explore it quickly since the early days. Similarly, I could write a Sudoku solver that uses brute-force search. That’s not the way I would do it by hand, of course. The approach to solving games with tractable search spaces has been to make algorithms that are very dumb but also fast. Now, are these two systems examples of artificial intelligence? In a literal sense, one could answer yes. The programmer has encoded intelligent rules into an automated system. A diligent human, even if not particularly smart, could execute those rules by hand given sufficient time. It’s also obvious that there is no thinking involved on the part of the computer. It’s blindly following deterministic algorithms that will stop working if the rules of the game change. If this is intelligent, so is a mechanical watch.
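To make the “very dumb but also fast” point concrete, here is a minimal minimax sketch for tic-tac-toe in Python. The board encoding (a 9-character string read row by row, with spaces for empty cells) and the function names are my own assumptions for illustration. The code simply tries every legal move, recursively, and scores finished games as +1 (X wins), -1 (O wins) or 0 (draw).

```python
from functools import lru_cache
from typing import Optional

# The eight winning lines on a 3x3 board, cells indexed 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def minimax(board: str, player: str) -> int:
    """Value of `board` with `player` to move, from X's point of view.

    +1 means X wins, -1 means O wins, 0 means a draw, assuming perfect play.
    """
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # board full, nobody won
    nxt = "O" if player == "X" else "X"
    scores = [minimax(board[:i] + player + board[i + 1:], nxt)
              for i, cell in enumerate(board) if cell == " "]
    # X picks the highest score, O the lowest: that is the whole "strategy".
    return max(scores) if player == "X" else min(scores)


def best_move(board: str, player: str) -> int:
    """Index of the first empty cell that achieves the minimax value for `player`."""
    nxt = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]
    pick = max if player == "X" else min
    return pick(moves, key=lambda i: minimax(board[:i] + player + board[i + 1:], nxt))
```

Calling `minimax(" " * 9, "X")` returns 0: with perfect play from both sides the game is a draw. Nothing in the code knows what a “threat” or a “fork” is; it just enumerates the few thousand reachable positions.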

Likewise, one can build a web search engine with the same procedure used to create the index of a book. This was my job over twenty years ago. Early search engines were essentially large tables listing every page that contained words like “cat” or “teledildonics.” The web grew from millions to billions of pages, and the only way to rebuild this index frequently enough (say every two or three weeks) was to keep throwing hardware at it. When people discovered that they could exploit search engines by inserting irrelevant keywords, an arms race began. At that point Google had an insight: the internet is shaped by the collective intelligence of its users. Could we aggregate the signal contained in hyperlinks to decide which sites are more relevant? If a page with millions of inbound links points to another with the text “Amazon,” this increases the probability that the target is the page a user wants when entering the word “Amazon” in the search box. Now we can ask the question: is this improved version of search artificially intelligent? I would say it is no more intelligent than the previous one. It is harnessing more human knowledge than before, but it’s just as easy to fool. Simply create link farms that point to each other, and you have reset the game. However, collective intelligence is now table stakes in the arms race.
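Here is a rough Python sketch of both ideas. The first part is a plain inverted index, the same structure as a book index. The second adds a deliberately simplified link signal: each word of a link’s anchor text credits the target page with 1 plus the number of inbound links of the linking page. This scoring rule, the toy pages and the links are all hypothetical; it is not Google’s actual PageRank, which computes page importance iteratively over the whole link graph.

```python
from collections import defaultdict

# Hypothetical toy web: page -> page text. d.example is stuffed with a keyword.
pages = {
    "a.example": "cats and more cats",
    "b.example": "buy books online",
    "c.example": "cat pictures",
    "d.example": "amazon amazon amazon cheap pills",
}

# Hypothetical links: (source page, target page, anchor text).
links = [
    ("a.example", "b.example", "Amazon"),
    ("c.example", "b.example", "Amazon books"),
    ("b.example", "a.example", "cats"),
]


def tokenize(text):
    return text.lower().split()


def build_index(pages):
    """Inverted index, like the index of a book: word -> pages containing it."""
    index = defaultdict(set)
    for page, text in pages.items():
        for word in tokenize(text):
            index[word].add(page)
    return index


def anchor_scores(links):
    """word -> {target page: score}, crediting each anchor word to the link target.

    Simplified, hypothetical rule: a link counts 1 plus the number of inbound
    links of the page that makes it, so links from popular pages weigh more.
    """
    inbound = defaultdict(int)
    for _src, dst, _anchor in links:
        inbound[dst] += 1
    scores = defaultdict(lambda: defaultdict(float))
    for src, dst, anchor in links:
        for word in tokenize(anchor):
            scores[word][dst] += 1 + inbound[src]
    return scores


def search(word, index, anchors):
    """Pages matching `word` in their text or in anchor text, link-credited pages first."""
    word = word.lower()
    by_anchor = anchors.get(word, {})
    candidates = index.get(word, set()) | set(by_anchor)
    return sorted(candidates, key=lambda p: (-by_anchor.get(p, 0.0), p))
```

With this toy data, searching for “Amazon” ranks b.example, which never mentions Amazon, above the keyword-stuffed d.example. Note that “cat” and “cats” are different words to this index: it matches strings, nothing more.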

We could ask similar questions about spam filtering using Bayesian algorithms. As you click the “this is spam” button, you feed a probability table with the words that appeared in the message. The estimated probability that an email containing the words “viagra” and “enlargement” is spam increases based on your actions. Is this system intelligent? It is taking advantage of your intelligence in discriminating spam from legit messages. However, someone who understands probabilities can figure out the trick and try to game it. If your filter hasn’t seen the word “v1agra,” then the message will pass. You will flag it and the Bayesian weights will update, but the spammer can stay ahead with creative misspellings. Of course, one can counter with more heuristics (edit distance, whatever). The arms race continues.
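The “probability table” can be sketched as a small naive Bayes classifier in Python. This is one common textbook variant (multinomial naive Bayes with add-one smoothing), assumed here for illustration; real filters differ in tokenization, smoothing and thresholds. The class name and any training messages you feed it are hypothetical.

```python
import math
from collections import Counter


class BayesSpamFilter:
    """Minimal multinomial naive Bayes with add-one smoothing, trained one click at a time."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.msg_counts = {"spam": 0, "ham": 0}

    def train(self, text, label):
        """Called when the user flags a message as "spam" or leaves it as "ham"."""
        self.word_counts[label].update(text.lower().split())
        self.msg_counts[label] += 1

    def spam_probability(self, text):
        """Estimated P(spam | words in text)."""
        words = text.lower().split()
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_msgs = sum(self.msg_counts.values())
        log_scores = {}
        for label in ("spam", "ham"):
            # Smoothed prior, so an empty class never produces log(0).
            log_p = math.log((self.msg_counts[label] + 1) / (total_msgs + 2))
            total_words = sum(self.word_counts[label].values())
            for w in words:
                if w not in vocab:
                    continue  # a never-seen word (say, a new misspelling) carries no evidence
                log_p += math.log((self.word_counts[label][w] + 1) / (total_words + len(vocab)))
            log_scores[label] = log_p
        # Turn the two log scores back into a normalized probability.
        m = max(log_scores.values())
        spam, ham = (math.exp(log_scores[k] - m) for k in ("spam", "ham"))
        return spam / (spam + ham)
```

After training on a couple of flagged messages containing “viagra,” a message with “v1agra” instead contains a word the filter has never seen, so that word adds no evidence either way until someone flags it and calls `train` again.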

Fast forward 15 or 20 years to the time of neural networks. Because these systems contain the word “neural,” a layperson might believe that there is intelligence at play. Those who actually understand what neural networks do are not fooled by the name. Even though the concept was inspired by the brain, in reality they are glorified matrix multipliers. They are systems composed of billions of numeric parameters whose values change over time. The changes happen as they try to minimize the error in classifying or generating data. Recent gains in storage capacity and parallel computation speed allow them to process giant datasets of human-generated content. However, everyone who has used a chatbot or an image generator knows that they are only as good as the data they were fed. If DALL-E, Stable Diffusion or GPT-3 generate content that seems novel, it’s not the result of a human-like creative process. It’s the blending of quantifiable points in a concept space, where relationships can be captured by equations like “king - man + woman = queen.”

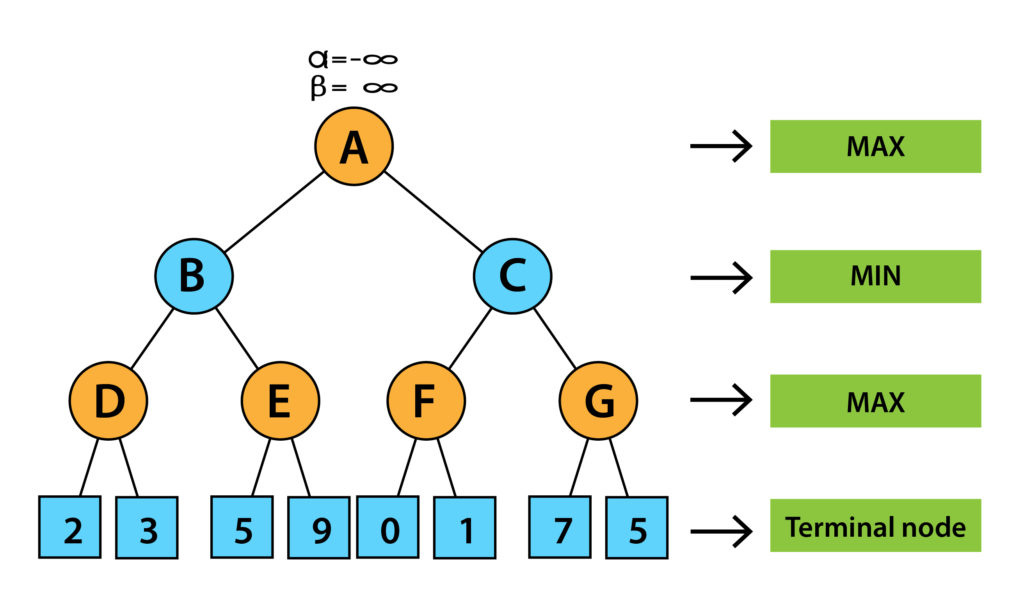
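The vector arithmetic in that equation is easy to reproduce at toy scale. In this Python sketch the three-dimensional vectors and their loose meaning (royalty, maleness, femaleness) are invented for illustration; real embeddings are learned from text and have hundreds of dimensions. The function subtracts and adds vectors, then returns the nearest remaining word by cosine similarity, a common way of evaluating such analogies.

```python
import math

# Hypothetical hand-made "embeddings"; dimensions loosely mean
# (royalty, maleness, femaleness). The numbers are invented, not learned.
VECS = {
    "king":  [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.9],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, vecs=VECS):
    """Solve a - b + c = ?, returning the closest word that is not one of the inputs."""
    target = [x - y + z for x, y, z in zip(vecs[a], vecs[b], vecs[c])]
    candidates = (w for w in vecs if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vecs[w], target))
```

Here `analogy("king", "man", "woman")` returns `"queen"` only because I chose the numbers that way; the code has no idea what a king is, just where a point sits relative to others.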

Now, are these generative approaches an example of artificial intelligence? If you argue that they are, then at what point did they become so? What makes DALL-E more intelligent than a minimax tic-tac-toe player or an alpha-beta pruning chess engine?

It is true that some of these systems use machine learning, in the sense that the algorithms implemented by the programmers will not solve any problems until the weights and biases have been tuned over sufficient iterations. However, is a matrix of tuned weights and biases intelligent? Is this the path to creating an entity that can navigate the world, make sense of the inputs it receives, and use them to solve problems in service of higher-level goals? So far, no signs indicate that this is so. There are still many aspects of intelligence not yet covered (or at least not covered well enough) by current approaches.

For example, humans have haphazard and evolving goals that emerge from our circumstances, in many cases unpredictably. We are not necessarily optimizing for any of them at a given time. They come and go, and the priorities change. Every decision we make is the result of a complex voting process among disparate systems shaped by evolution. Like cats and dogs, we know to avoid certain dangers, such as falling off a cliff, without having to learn them. We know not to sit on a bench with a “wet paint” sign on it because we have learned to interpret written language, and also that getting paint on our butt cheeks does not normally increase our life satisfaction.

To complicate matters even more, some people throw in a G for general intelligence. Unfortunately, nobody has a good definition for what that would be. Humans are far from generally intelligent; our cognitive skills are pretty narrow compared to what we can imagine. We cannot remember even a few megabytes of data. We are aware of some of our cognitive biases, yet we cannot modify our firmware to make them go away. We know that it is possible to reason about things, but we can only do it a fraction of the time. Perhaps in the next few decades we will stumble upon ways to build systems that will match or surpass our current capabilities in every domain of interest to us. It’s tempting to look at the current state of the art and think “wow, we are close to full machine intelligence.” For the most part, this is a reflection of how easy it is to fool us. The appearance of artificial intelligence exploits our natural stupidity.

Do you think the paragraph beginning "For example, humans have haphazard..." describes exactly what "intelligence" is? That it is unique to each person, based on their objectives or goals, which change frequently as a result of their collective experience?